During the winter semester 17/18 I took a course called “Multi-sensory Based Dynamic Robot Manipulation” at the Institute for Cognitive Systems at the Technical University of Munich where I am currently pursuing my masters degree in robotics.

The course was one of the best I’ve had in my university career which is mainly because Mr. Dean, our lecturer, did such a great job. It was sometimes painfully hard to get the homework done because I had to learn so many new things at the same time. Coding in C++, using Ubuntu and ROS, control theory, robot dynamics and kinematics… The course had a final project in the end which I did together with two other people in a team.

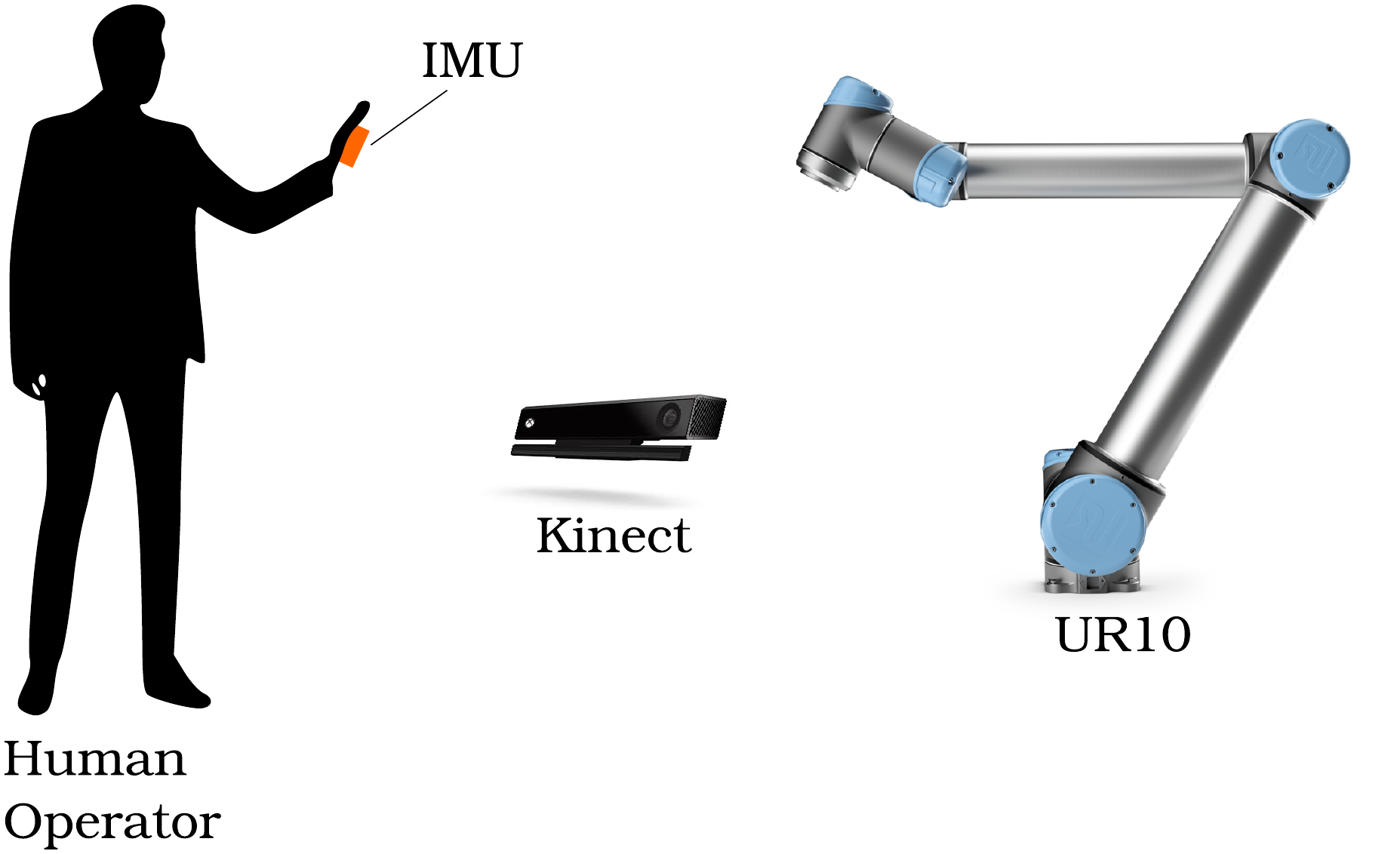

The scope was to use an small industrial robot (Universal Robot 10 in our case) in combination with some sensors to solve a small, open task. We decided to implement a handtracking robot which means that the robot imitates the movement of a human who stands in front of it. We aimed to use an inertial measurement unit to get acceleration readings of the hand and a Microsoft Kinect with its depth camera to get absolute position readings. A schematic of the setup is shown below.

A human operator controls an industrial robot with an IMU in his hand.

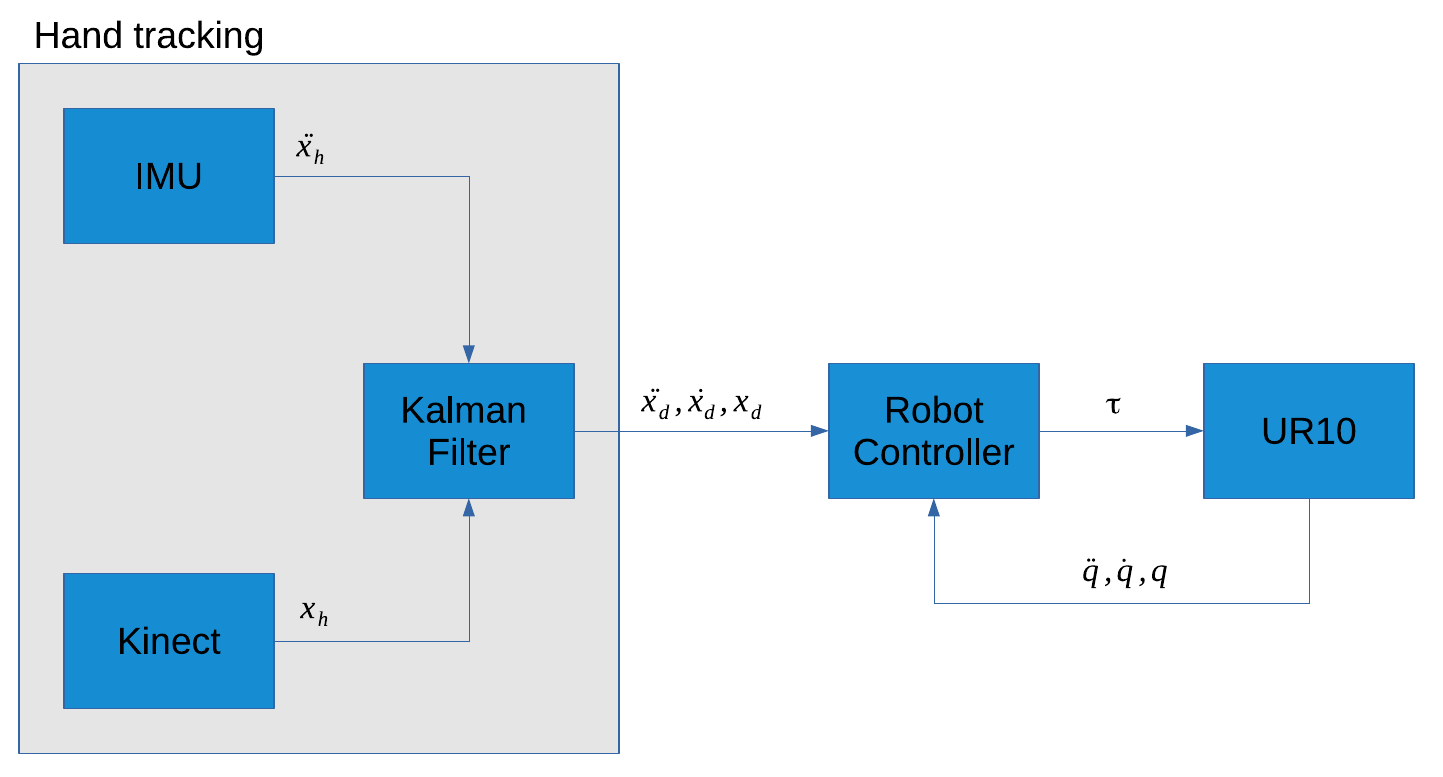

The position and acceleration information of the sensors is fused through a Kalman Filter. The result is then fed into the robot controller which acts on the torque interface of the UR10 robot.

Schematic of the information flow

My part was setting up the IMU and the Kalman Filter, whereas one of my colleagues worked on the Kinect and the other one on the robot controller. We spent hours in the lab but finally got the system working quite nicely. The IMU allows us to have a dynamic control inputs because the sensed acceleration is except some smoothing directly fed into the controller as the desired state. We wrote a little report on the project which you can find here if your interested.